Convolution 2D

Convolution

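Note: most figures in CNN explanations appear to depict cross-correlation rather than convolution in the strict mathematical sense, because the kernel is slid over the input without being flipped.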
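The difference can be made concrete with a minimal pure-Python sketch. It assumes a single channel, stride 1 and no padding, and the helper names `cross_correlate2d`, `flip180` and `convolve2d` are made up for illustration. True convolution is cross-correlation with the kernel rotated by 180 degrees. PyTorch's `nn.Conv2d` documentation likewise describes its operator as a valid 2D cross-correlation.

```python
def cross_correlate2d(x, w):
    """Slide kernel w over input x WITHOUT flipping it (what most CNN figures show)."""
    kh, kw = len(w), len(w[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1  # "valid" output size
    return [
        [sum(x[i + u][j + v] * w[u][v] for u in range(kh) for v in range(kw))
         for j in range(ow)]
        for i in range(oh)
    ]


def flip180(w):
    """Rotate a 2D kernel by 180 degrees (reverse the rows, then each row)."""
    return [row[::-1] for row in w[::-1]]


def convolve2d(x, w):
    """Mathematical convolution = cross-correlation with the 180-degree-flipped kernel."""
    return cross_correlate2d(x, flip180(w))


x = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
w = [[1, 0],
     [0, 0]]  # asymmetric kernel, so flipping changes the result

print(cross_correlate2d(x, w))  # [[1, 2], [4, 5]]
print(convolve2d(x, w))         # [[5, 6], [8, 9]]
```

For a symmetric kernel the two results coincide. Because CNN kernels are learned anyway, a network can simply learn the flipped weights. This is the usual explanation for why frameworks implement cross-correlation and still call the layer a convolution.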

Kernel example

Forward-propagation

in_channels=1, out_channels(filters)=1, kernel_size=3, stride=1, padding=1, dilation=1, bias=False
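With `kernel_size=3`, `stride=1` and `padding=1`, the output keeps the input's spatial size. The sketch below assumes zero padding and a made-up 5x5 input of ones. It applies the output-size formula given in PyTorch's `nn.Conv2d` documentation, H_out = floor((H_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride) + 1. It also shows that border outputs see fewer real input values. `conv_output_size`, `zero_pad` and `box_sum3x3` are hypothetical helpers.

```python
def conv_output_size(n, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial axis: floor((n + 2p - d(k - 1) - 1) / s) + 1."""
    return (n + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def zero_pad(x, p):
    """Surround a 2D list with p rows and p columns of zeros on every side."""
    width = len(x[0]) + 2 * p
    rows = [[0] * width for _ in range(p)]
    rows += [[0] * p + list(r) + [0] * p for r in x]
    rows += [[0] * width for _ in range(p)]
    return rows


def box_sum3x3(x):
    """Cross-correlation with an all-ones 3x3 kernel (stride 1, no extra padding)."""
    oh, ow = len(x) - 2, len(x[0]) - 2
    return [[sum(x[i + u][j + v] for u in range(3) for v in range(3))
             for j in range(ow)] for i in range(oh)]


x = [[1] * 5 for _ in range(5)]                     # 5x5 input of ones
print(conv_output_size(5, 3, stride=1, padding=1))  # 5, same as the input
for row in box_sum3x3(zero_pad(x, 1)):
    print(row)
# [4, 6, 6, 6, 4]
# [6, 9, 9, 9, 6]
# [6, 9, 9, 9, 6]
# [6, 9, 9, 9, 6]
# [4, 6, 6, 6, 4]
```

Corner outputs only overlap 4 real input cells and edge outputs 6. This is the practical side effect of padding with zeros.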

in_channels=1, out_channels(filters)=1, kernel_size=3, stride=2, padding=0, dilation=1, bias=False
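With `stride=2` and no padding, the window jumps two cells at a time. A 5x5 input therefore gives floor((5 - 3) / 2) + 1 = 2, i.e. a 2x2 output. The pure-Python sketch below assumes a single channel and a made-up input whose entries count 0..24. The kernel only picks the centre of each window, which makes the visited positions easy to read off. `strided_xcorr2d` is an illustrative name.

```python
def strided_xcorr2d(x, w, stride):
    """Valid cross-correlation of x with w, moving the window `stride` cells per step."""
    kh, kw = len(w), len(w[0])
    oh = (len(x) - kh) // stride + 1
    ow = (len(x[0]) - kw) // stride + 1
    out = []
    for i in range(oh):
        row = []
        for j in range(ow):
            r, c = i * stride, j * stride  # top-left corner of the current window
            row.append(sum(x[r + u][c + v] * w[u][v]
                           for u in range(kh) for v in range(kw)))
        out.append(row)
    return out


x = [[5 * r + c for c in range(5)] for r in range(5)]  # 5x5 input holding 0..24
w = [[0, 0, 0],
     [0, 1, 0],
     [0, 0, 0]]                                        # returns each window's centre value

print(strided_xcorr2d(x, w, stride=1))  # [[6, 7, 8], [11, 12, 13], [16, 17, 18]]
print(strided_xcorr2d(x, w, stride=2))  # [[6, 8], [16, 18]]
```

The stride-2 result keeps every other entry of the stride-1 result. When (H_in + 2*padding - kernel_size) is not a multiple of the stride, the floor in the formula drops the rows or columns that a full window can no longer reach.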

in_channels=1, out_channels(filters)=1, kernel_size=3, stride=1, padding=0, dilation=2, bias=False
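With `dilation=2`, the 3x3 kernel taps input cells two apart. It therefore covers a footprint dilation*(kernel_size - 1) + 1 = 5 cells wide while still having only 9 weights, so a 7x7 input gives a 3x3 output. The sketch assumes a single channel, stride 1 and no padding. `dilated_xcorr2d` and `dilate_kernel` are illustrative names, and `dilate_kernel` builds the equivalent "kernel with zeros inserted" explicitly.

```python
def dilated_xcorr2d(x, w, dilation):
    """Valid cross-correlation where kernel taps are `dilation` cells apart."""
    kh, kw = len(w), len(w[0])
    eh = dilation * (kh - 1) + 1  # effective footprint height
    ew = dilation * (kw - 1) + 1  # effective footprint width
    oh, ow = len(x) - eh + 1, len(x[0]) - ew + 1
    return [[sum(x[i + dilation * u][j + dilation * v] * w[u][v]
                 for u in range(kh) for v in range(kw))
             for j in range(ow)] for i in range(oh)]


def dilate_kernel(w, dilation):
    """Build the equivalent dense kernel by inserting (dilation - 1) zeros between taps."""
    kh, kw = len(w), len(w[0])
    out = [[0] * (dilation * (kw - 1) + 1) for _ in range(dilation * (kh - 1) + 1)]
    for u in range(kh):
        for v in range(kw):
            out[dilation * u][dilation * v] = w[u][v]
    return out


x = [[7 * r + c for c in range(7)] for r in range(7)]  # 7x7 input holding 0..48
w = [[1, 0, 0],
     [0, 0, 0],
     [0, 0, 1]]                                        # top-left + bottom-right taps

y = dilated_xcorr2d(x, w, dilation=2)
print(y)  # [[32, 34, 36], [46, 48, 50], [60, 62, 64]]
# Same result as an ordinary (dilation=1) pass with the zero-stuffed 5x5 kernel:
print(dilated_xcorr2d(x, dilate_kernel(w, 2), dilation=1) == y)  # True
```

Each output here is x[i][j] + x[i+4][j+4]. The two taps are 4 cells apart even though the kernel itself is only 3x3.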

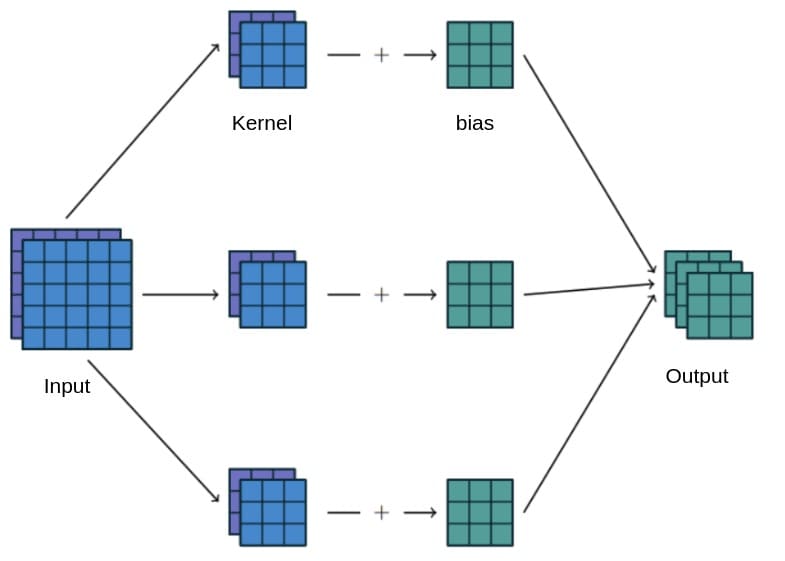

in_channels=2, out_channels(filters)=3, kernel_size=3, stride=1, padding=0, dilation=1, bias=True
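With several channels, each output channel (filter) has its own stack of `in_channels` kernels. The per-channel cross-correlations are summed, and then that output channel's bias is added. This sketch assumes PyTorch's `nn.Conv2d` layout, where the weight has shape (out_channels, in_channels, kernel_size, kernel_size) and the bias has shape (out_channels,). Under that layout, the configuration above has 3*2*3*3 = 54 weights plus 3 biases. The sketch uses nested lists, stride 1 and no padding. `conv2d_multi` and the all-ones data are made up for illustration.

```python
def conv2d_multi(x, weight, bias):
    """x: [C_in][H][W], weight: [C_out][C_in][kH][kW], bias: [C_out] or None.
    Valid cross-correlation, stride 1, no padding, no dilation."""
    c_in, h, w_in = len(x), len(x[0]), len(x[0][0])
    c_out = len(weight)
    kh, kw = len(weight[0][0]), len(weight[0][0][0])
    oh, ow = h - kh + 1, w_in - kw + 1
    y = []
    for o in range(c_out):                  # one output plane per filter
        plane = []
        for i in range(oh):
            row = []
            for j in range(ow):
                s = bias[o] if bias is not None else 0
                for c in range(c_in):       # sum over ALL input channels
                    for u in range(kh):
                        for v in range(kw):
                            s += x[c][i + u][j + v] * weight[o][c][u][v]
                row.append(s)
            plane.append(row)
        y.append(plane)
    return y


C_IN, C_OUT, K = 2, 3, 3
x = [[[1] * 4 for _ in range(4)],           # channel 0: 4x4 of ones
     [[2] * 4 for _ in range(4)]]           # channel 1: 4x4 of twos
weight = [[[[1] * K for _ in range(K)] for _ in range(C_IN)] for _ in range(C_OUT)]
bias = [0, 1, 2]

y = conv2d_multi(x, weight, bias)
print(len(y), len(y[0]), len(y[0][0]))      # 3 2 2
print([plane[0][0] for plane in y])         # [27, 28, 29]: 9*1 + 9*2 + bias[o]
print(C_OUT * C_IN * K * K + C_OUT)         # 57 learnable parameters
```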

Back-propagation
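The gradients can be sketched under simplifying assumptions: a single channel, stride 1, no padding, no dilation, and Y = X ⋆ W as in the forward pass. Let G = ∂L/∂Y be the upstream gradient. Then ∂L/∂W is the valid cross-correlation of X with G. ∂L/∂X is the "full" convolution of G with W, which is equivalent to cross-correlating zero-padded G with W rotated by 180 degrees. The helper names and example data below are hypothetical. The finite-difference check is exact here because L is linear in both X and W.

```python
def xcorr_valid(x, w):
    """Forward pass: valid cross-correlation, stride 1, no padding."""
    kh, kw = len(w), len(w[0])
    return [[sum(x[i + u][j + v] * w[u][v] for u in range(kh) for v in range(kw))
             for j in range(len(x[0]) - kw + 1)] for i in range(len(x) - kh + 1)]


def grad_weight(x, g):
    """dL/dW[u][v] = sum_ij g[i][j] * x[i+u][j+v]: valid cross-correlation of x with g."""
    return xcorr_valid(x, g)


def grad_input(g, w):
    """dL/dX: scatter each g[i][j] back through the weights it was computed with.
    This equals the 'full' convolution of g with w."""
    kh, kw = len(w), len(w[0])
    dx = [[0] * (len(g[0]) + kw - 1) for _ in range(len(g) + kh - 1)]
    for i in range(len(g)):
        for j in range(len(g[0])):
            for u in range(kh):
                for v in range(kw):
                    dx[i + u][j + v] += g[i][j] * w[u][v]
    return dx


def loss(x, w, g):
    """Scalar L = sum(g * Y), chosen so that dL/dY is exactly g."""
    y = xcorr_valid(x, w)
    return sum(g[i][j] * y[i][j] for i in range(len(g)) for j in range(len(g[0])))


def bumped(m, r, c):
    """Copy of matrix m with entry (r, c) increased by 1."""
    m2 = [row[:] for row in m]
    m2[r][c] += 1
    return m2


x = [[4 * r + c for c in range(4)] for r in range(4)]  # 4x4 example input
w = [[1, 2], [3, 4]]                                   # 2x2 example kernel
g = [[1, -1, 2], [0, 1, 1], [2, 0, -1]]                # stand-in upstream gradient (3x3)

dw, dx = grad_weight(x, g), grad_input(g, w)
base = loss(x, w, g)
# L is linear in x and in w, so a +1 nudge changes L by exactly the gradient entry.
ok_w = all(loss(x, bumped(w, u, v), g) - base == dw[u][v]
           for u in range(2) for v in range(2))
ok_x = all(loss(bumped(x, a, b), w, g) - base == dx[a][b]
           for a in range(4) for b in range(4))
print(ok_w, ok_x)  # True True
```

If a bias is used, ∂L/∂b is simply the sum of all entries of G. Strides, padding and multiple channels change the index bookkeeping but not the basic idea.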

Reference

- https://www.jefkine.com/general/2016/09/05/backpropagation-in-convolutional-neural-networks/

- https://towardsdatascience.com/a-comprehensive-introduction-to-different-types-of-convolutions-in-deep-learning-669281e58215

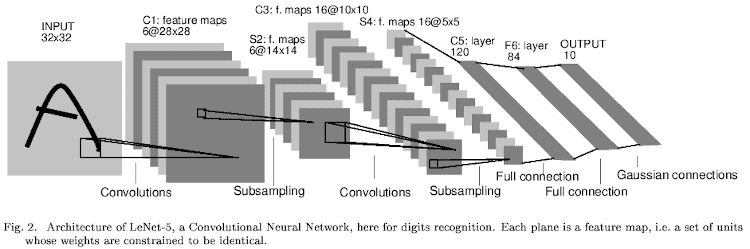

- Gradient-Based Learning Applied to Document Recognition (LeNet-5)

- A guide to convolution arithmetic for deep learning